Department Chair/Program Head

Assessment of program learning outcomes is coordinated by the academic program or department. This is often the role of the Department Chair or Program Head unless the Unit has delegated these responsibilities to an Assessment Coordinator or Assessment Committee. Assessment and Accreditation provides support for this process.

The Department Chair/Program Head assessment role includes the following tasks:

Developing and Coordinating the Assessment Plan

A minimum of one program learning outcome should be assessed each year with all program learning outcomes assessed over a three-year period. The Department Chair/Program Head is tasked with deciding what the assessment cycle looks like for the program. The schedule may be the same for all majors/degrees in the department or may vary by specific ‘program of study’ (major/degree level). Faculty will need to know which program learning outcome they will contribute data for. Communicating the assessment cycle in advance helps faculty plan too.

Some programs are required to collect data on all outcomes for a programmatic accreditor or other requirement. However, for the purposes of the University of Idaho’s systematic assessment process, programs are afforded the flexibility to develop a plan that is manageable and encourages meaningful engagement with the data being collected. Collecting data is the first step in assessment. Assessment is complete when the program has also analyzed and discussed the data and incorporated the findings into action and curricular changes. The assessment cycle should allow time for faculty to engage in all aspects of assessment each year.
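The scheduling rule above (at least one outcome each year, every outcome covered within three years) can be sketched in a few lines of Python. This is only an illustration, not a U of I tool: the function name `build_assessment_cycle`, the outcome labels, and the start year are all hypothetical.

```python
def build_assessment_cycle(outcomes, start_year, cycle_years=3):
    """Assign outcomes to years so every year has at least one outcome
    and every outcome appears at least once within the cycle."""
    if not outcomes:
        raise ValueError("at least one program learning outcome is required")
    years = [start_year + i for i in range(cycle_years)]
    schedule = {year: [] for year in years}
    # Use enough slots to cover every outcome and every year.
    # With fewer outcomes than years, outcomes repeat to fill the cycle.
    slots = max(len(outcomes), cycle_years)
    for i in range(slots):
        schedule[years[i % cycle_years]].append(outcomes[i % len(outcomes)])
    return schedule


# Hypothetical outcome labels and start year, for illustration only.
plan = build_assessment_cycle(["PLO 1", "PLO 2", "PLO 3", "PLO 4"], start_year=2025)
for year, assigned in plan.items():
    print(year, ", ".join(assigned))
```

A simple round-robin like this is only a starting point; a program may prefer to group outcomes by accreditor requirements or by which courses are offered in a given year.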

For each program learning outcome being assessed, the assessment plan should detail where in the curriculum or student experience the student will receive instruction in support of this outcome and where in the curriculum the student should have finally mastered the full outcome. These touchpoints are opportunities to assess learning of the outcome. A solid plan assesses students throughout the curriculum, giving faculty the opportunity to contribute data at multiple levels. The U of I Assessment Planning Worksheet can help you develop your plan.

- Baseline/Diagnostic – assessing student knowledge or skill when they enter the program before any instruction has occurred or during a 100-level course open to non-majors. This could also serve as a pre-test with a post-test administered later in the curriculum. This data helps a program understand what students already know and also provides data for measuring growth.

- Formative – assessing student knowledge or skill as they progress through the program to ensure they are making adequate progress toward mastery. For this data, faculty are not yet looking for mastery of the learning outcome. Instead, they are looking for evidence that students are developing or making suitable progress toward mastery. Students may “meet” the expectation with a lower score on an assignment or by achieving a “developing” rating on a rubric.

- Mastery/Summative – assessing student mastery of the learning outcome. For this data, faculty are looking for mastery of the learning outcome. They may be assessing mastery level of the learning outcome (example: all forms of communication) or for a specific component (example: written communication only). A senior capstone project may be evaluated for student achievement of the full outcome statement or several upper-level courses may each evaluate a component of the outcome to collectively cover the whole outcome statement. Students have achieved the outcome or they haven’t.

The following should be considered when developing this plan:

- Definitions of rigor

Definitions of rigor can help faculty with judging student work or performance. Additionally, having shared definitions means that faculty are applying these same definitions across the curriculum. These definitions provide transparency to students, faculty and external reviewers about what is expected for each level of learning. For example, what should students demonstrate when they enter the program (baseline/diagnostic), as they progress through the program (formative/develop) and when they have finally “mastered” the program learning outcome (mastery/summative). When faculty are assessing students using the same definitions, your data will be more informative of trends and progress.

- Intentionally crafted and sequenced learning activities

Students should have plenty of opportunity to learn and practice what is required of them before they are assessed for mastery of the program learning outcome.

- Mapping of learning outcomes

Knowing how course learning outcomes support mastery of the program learning outcomes is important and helpful. Faculty are already assessing the course learning outcomes as part of the course. If the course learning outcome has been identified to align with the program learning outcome, this course level assessment could be used as evidence to support the program learning outcome. Additionally, this mapping helps the program understand where students are learning the content and skills needed to master the program level outcome. It may be helpful to regularly review the course learning outcomes on syllabi and make a map for which courses are introducing knowledge, reinforcing knowledge, or already assessing mastery of the program learning outcomes. By mapping the program learning outcomes with courses this document can be used to plan for different levels of assessment. It can also help the program identify any gaps that might exist.

- Multiple methods of assessment

Each program learning outcome should have multiple methods of assessment. Methods are different than assessing at different levels. The assessment plan includes both direct and indirect methods of assessment.

- Direct Measure – the student is evaluated for something they have produced or performed. This requires identifying an action the student will engage in which will be evaluated. Examples include assignments, projects, exams and performances. Faculty judge the student’s performance and assign the achievement level or score. Direct measures can be found at all levels of assessment.

- Indirect Measure – the student is evaluated based on their opinion/satisfaction, participation, grades, or data not collected from a student’s demonstration of the program learning outcome specifically. Examples include surveys (how well did the student feel they learned something or how satisfied are they with the instruction of something), course grade, or number of publications. Indirect measures can be found at all levels of assessment.

- External Measure – the student is evaluated on their performance of the program learning outcome outside of the program itself. Examples include a subject-specific exam, licensure exam or employer satisfaction/feedback of graduates. Someone external to the academic program judges the student’s performance and assigns the achievement level or score. External measures are generally at the mastery level as they assess concrete criteria. The student has achieved it or hasn’t.

- Clear linkage between learning outcomes and assessment measures

Someone who looks at the assignment, project, or exam that was used to evaluate the student on the program learning outcome should understand why it was chosen and be confident that the data collected is evidence that the student accomplished the program learning outcome. Our programs need good data so they can make good decisions that support continuous improvement. The assignment, project, or exam should not seem random. Additionally, if the entire assignment score is being used, then the entire score should reflect the program learning outcome only. Otherwise, a subscore might make more sense. Similar to how faculty develop methods for evaluating students on course learning outcomes, assessments for program learning outcomes should be intentionally designed and clearly linked.
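The mapping of learning outcomes described above (which courses introduce, reinforce, or assess mastery of each program learning outcome) can be sketched as a small data structure with a gap check. This is a minimal illustration and not how Anthology builds its curriculum map: the course names, outcome labels, level names, and the `find_gaps` helper are all hypothetical.

```python
# Levels a course can contribute for a program learning outcome (assumed labels).
LEVELS = ("introduce", "reinforce", "mastery")


def find_gaps(curriculum_map, outcomes):
    """Return {outcome: [missing levels]} for outcomes with no course at some level."""
    gaps = {}
    for outcome in outcomes:
        covered = {
            level
            for course_levels in curriculum_map.values()
            for plo, level in course_levels.items()
            if plo == outcome
        }
        missing = [level for level in LEVELS if level not in covered]
        if missing:
            gaps[outcome] = missing
    return gaps


# Hypothetical map: course -> {program learning outcome: level}.
curriculum_map = {
    "COURSE 101": {"PLO 1": "introduce"},
    "COURSE 301": {"PLO 1": "reinforce", "PLO 2": "introduce"},
    "COURSE 490": {"PLO 1": "mastery"},
}

gaps = find_gaps(curriculum_map, ["PLO 1", "PLO 2"])
print(gaps)
```

In this made-up example, the second outcome is introduced but never reinforced or assessed for mastery, which is the kind of gap a curriculum map is meant to reveal.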

All faculty are asked to engage in assessment of program learning outcomes. In general, faculty are asked to contribute assessment data toward one learning outcome from one course each semester. However, the Department Chair/Program Head may make some exceptions based on the assessment plan and/or ask a faculty member to contribute to the program’s assessment in another way (example: leading analysis or another task). General guidelines are intended to ensure U of I is engaged in a systematic process and to support faculty engagement in program assessment efforts. Programs with an established assessment plan may provide further guidelines to their faculty.

In addition to contributing assessment data, faculty should also be engaged in discussion of the program’s assessment findings. If a single person is tasked with analyzing the data collected, the findings should be shared with all stakeholders in an inclusive and respectful manner. Additionally, decision-makers should be aware of the findings and faculty recommendations so the data can be used to make improvements to the curriculum and program.

Data on program learning outcomes should be reported at the individual student level. This is similar to how students receive an individual grade. A quantitative score for how well the outcome was performed should be collected for each student in the program (when possible). The score can be a percentage or points. The total points are customizable. For example, something could be worth 100 points (on an assignment or a rubric) or maybe something is rated on a 1-4 scale (1 – beginning, 2 – developing, 3 – meeting, 4 – exceeding).

List of information needed for each assessment: (see example of how this data is entered under “how should the data be reported?”)

- List of students with their scores

- Name of the assessment (assignment, exam, project name, etc.)

- Level of assessment (baseline/diagnostic, formative, or mastery/summative)

- Scale type (pass/fail, or standard scale)

- Scoring type (are the scores percentages or points)

- Threshold (what score is considered to demonstrate the student has “met” the outcome)

- Faculty comments on the assessment (optional)

- Evidence or documentation such as a copy of the assignment, or an example of student work (optional)
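The information listed above can be pictured as one record per assessment, with a threshold that determines which students have “met” the outcome. The sketch below is illustrative only and does not reflect how Anthology stores data: the `Assessment` class, its field names, the student IDs, and the assumption that a score at or above the threshold counts as “met” are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Assessment:
    name: str           # assignment, exam, or project name
    level: str          # "baseline/diagnostic", "formative", or "mastery/summative"
    scale_type: str     # "pass/fail" or "standard scale"
    scoring_type: str   # "percentage" or "points"
    threshold: float    # score considered to have "met" the outcome
    scores: dict = field(default_factory=dict)  # student ID -> score
    comments: str = ""  # optional faculty comments

    def students_meeting(self):
        # Assumption: a score equal to or above the threshold counts as "met".
        return [sid for sid, score in self.scores.items() if score >= self.threshold]

    def percent_met(self):
        if not self.scores:
            return 0.0
        return 100 * len(self.students_meeting()) / len(self.scores)


# Hypothetical rubric on the 1-4 scale, where 3 ("meeting") is the threshold.
rubric = Assessment(
    name="Capstone presentation rubric",
    level="mastery/summative",
    scale_type="standard scale",
    scoring_type="points",
    threshold=3,
    scores={"S001": 4, "S002": 3, "S003": 2, "S004": 1},
)
print(rubric.students_meeting(), rubric.percent_met())
```

Keeping scores at the individual student level, as described above, is what makes summaries like the percentage of students meeting the threshold possible.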

The University of Idaho’s assessment management system, Anthology, offers various tools for collecting assessment data from faculty. This is because many of our programs are unique or have an established process we need to support. To reduce confusion for faculty, Department Chairs/Program Heads should communicate to faculty how the data will be reported including which tool and/or method using Anthology.

If you are unfamiliar with the options available, please review the pros and cons for each one. You can also use the Assessment Reporting Method Recommendation Tool at the bottom of this page to get a recommendation based on your answers to the questions it asks.

If you already know which option is best for your program, view the instructions.

Option 1: Entering data at the program level (major/degree)

Pros

- Everyone in the program uses the same instructions to enter data

- Minimal coordination or set-up required

- Does not require data be associated with a specific course, such as survey or external exam data

- It is possible to enter someone else’s data on their behalf

- Faculty data is aggregated for each outcome and visible to chair

Cons

- Access to the program-level must be requested for each faculty member (only once)

- No system-generated curriculum map

- Everyone in the program can view and edit all of the data

- Faculty may not know which learning outcome they should report data on

- Must manually look up and add students to each assessment

Option 2: Assigning program learning outcomes to course sections

Pros

- System generates a curriculum map

- Familiar method for faculty who also report data on General Education learning outcomes

- System generates an email reminder to faculty to enter data

- It is possible to enter someone else’s data on their behalf

- Uses default permissions

- Limits who can view or enter data

- Students can automatically be added to an assessment using the CLASS ROSTER button

- Faculty data is aggregated for each outcome and visible to chair

Cons

- Moderate coordination and set-up required each semester

- All data must be entered at the course-level. General survey data or external exam data not associated with a course must be added directly to the annual report in Anthology Planning.

Option 3: Relating course learning outcomes data with program learning outcomes data

Pros

- No up-front set-up required

- Uses default permissions

- Limits who can view or enter data

- System generates a curriculum map

- Supports Anthology Rubric data, if the program or an individual faculty member uses this tool

- A course learning outcome can be related to more than one program learning outcome

Cons

- Data collected might not align with program learning outcome at all

- Faculty narratives focus on course learning outcomes only

- Faculty must enter their course learning outcomes into the system before they can report data

- Faculty data is not aggregated for each outcome nor automatically visible to chair

- Substantial coordination required to relate course and program learning outcomes

- May not be the best option for a program experiencing turnover

- Faculty may be less exposed to the program learning outcomes

Option 4: Using Signature Assignments in Anthology Rubrics

Pros

- Targets specific program learning outcomes and quickly collects common data across course sections

- Rich analysis possible

- Rubrics are direct measures

- Uses default permissions

- Small learning curve

- Faculty can choose which course section and/or assignment to use the rubric on

- Minimal coordination once established

- System generates an email reminder to faculty to enter data

- Faculty do not need to login to Anthology Outcomes

- Rubric can be very simple, or more complex – depending on program’s needs

- Rubrics can be reused and repurposed.

- The AAC&U rubrics are preloaded for use or adaptation for quick rubric creation

- Single rubric can provide data for multiple program learning outcomes, or even all outcomes.

Cons

- Requires creating standard rubrics for your program learning outcomes.

- Requires the department chair/head to manage the rubric. They must do some basic set-up each semester and add faculty to the rubric so they receive the email with the link.

- The department chair or head must make the connection in Anthology Outcomes with the rubric before the data will flow in.

Data can be entered into Anthology Outcomes in various ways, depending on the type of data your program collects. Part 1 of the instructions applies to all programs. Part 2 gives instructions for setting up the various reporting methods. If you are unsure which option is best for your program or faculty, please be sure to view the pros and cons under “Which data collection method is best for our program and faculty?”

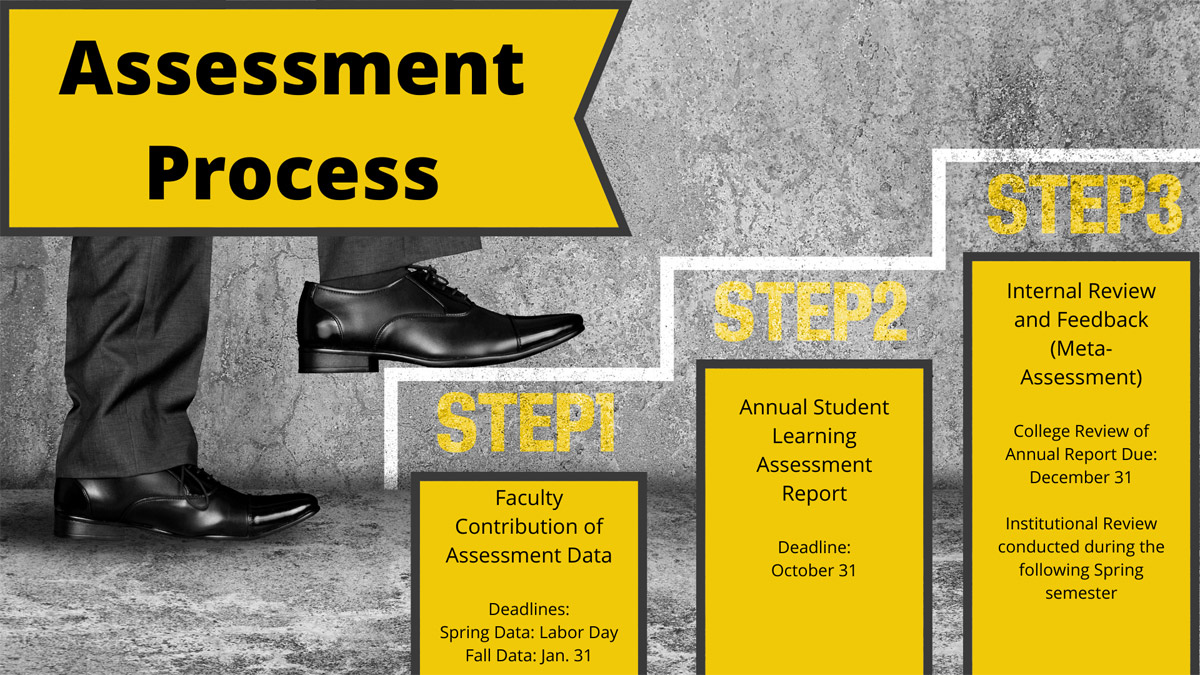

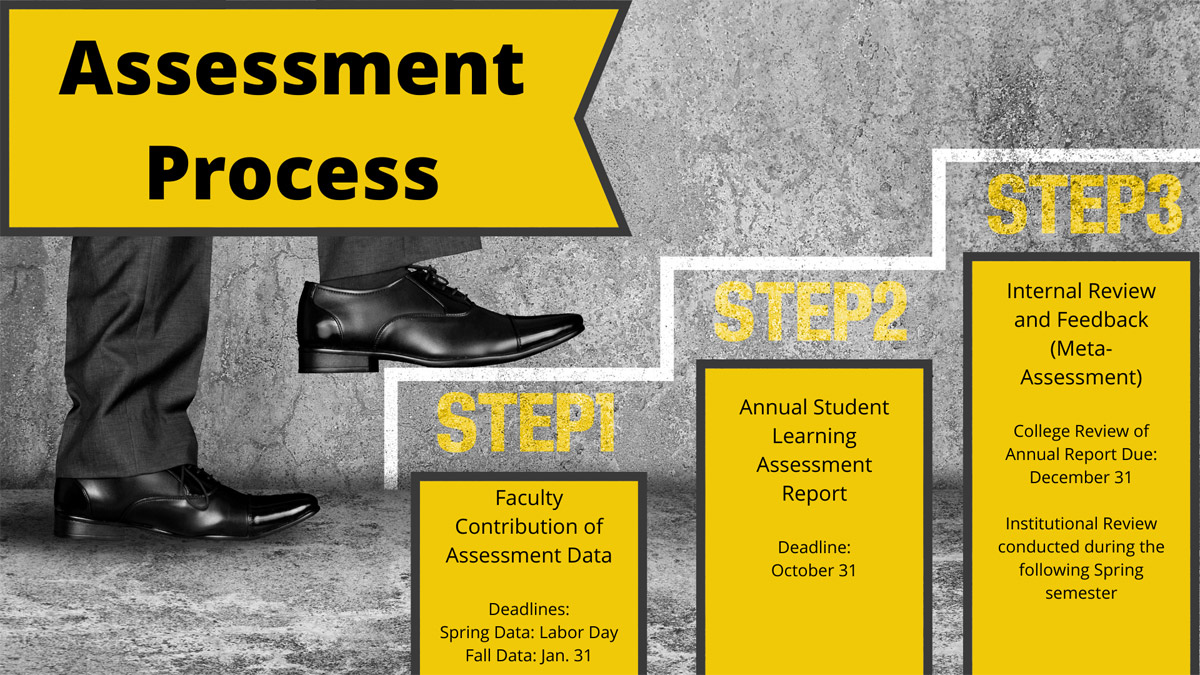

A general deadline is shared annually for all programs and faculty.

- Step 1: Faculty Deadline for Entering Data into Anthology Outcomes

Faculty provide data for 1-2 program or general education learning outcomes each semester (Spring and Fall). Data can be entered anytime before the deadline. The deadline each semester is approximately 2 weeks after the next semester begins.

To simplify these dates, data is always due on the dates specified below:

Labor Day for Spring Semester data

Jan. 31 for Fall Semester data

- Step 2: Department Chair Deadline for Completing the Annual Student Learning Assessment Report (APR) is Oct. 31

Department Chairs/Program Heads are tasked with ensuring results of program learning outcomes assessment are reviewed by program faculty and used to inform program improvement. It can be helpful to establish practices for reviewing the curriculum, analyzing student learning and planning for instructional improvement.

These practices should be well-documented and may include:

- Ensuring the impacts of curricular decisions on programs of study and their outcomes are carefully reviewed

- Consulting faculty from other disciplines when reviewing data and/or discussing opportunities for improvement

- Consulting with learning support services – both specific to the program and institution-wide – on academic needs identified from assessment efforts (including indirect measures such as surveys or other student opinions/feedback)

- Consulting with an advisory and/or alumni group

- Engaging students in a focus group or other feedback session when reviewing the findings or identifying opportunities for improvements

Annual program assessment reporting is done in Anthology Planning and is one section of the academic program review process. Program review evaluates the overall “program,” often called a department, and includes a summary report on each “program of study” assessment plan. The Department Chair/Program Head may be tasked with completing the program assessment report template for one or more “programs of study” each year. The data collected from faculty, along with other data points the program tracks, are analyzed and summarized in this report.

Directions: Completing the Annual Assessment Report in Anthology Planning

Examples of past reports and details on how assessment reports are evaluated for quality are available on the Meta-Assessment webpage.

Anthology Assessment Tools

Anthology is the U of I’s assessment management system. Users select from the available assessment tools after logging into the system.

On the Anthology homepage you can access all Anthology assessment tools. All U of I employees and students have access to Anthology and can login using U of I credentials (same as MyUI). The homepage looks like this:

You can access these tools by clicking on the corresponding tile from the homepage. You can switch between tools by clicking on the colorful bar chart icon in the upper left-hand corner of most screens to return to the homepage. Users can have multiple tabs and/or multiple Anthology tools open at once.

The following tools are used for learning outcomes assessment:

| Anthology Tool | Description | Supports U of I Process | General Users |
|---|---|---|---|
| Anthology Outcomes | Data collection tool; used to collect data on a specific learning outcome | Learning Outcomes Assessment | Faculty, Staff, Department Chairs/Program Heads |
| Anthology Rubrics | Data collection tool; used to collect individual student data using a rubric | Learning Outcomes Assessment | Faculty |
| Anthology Planning | Reporting tool; used to produce annual reports at U of I | Learning Outcomes Assessment; Annual Program Review | Department Chairs, Administrators |
| Anthology Compliance Assist | Reporting tool; used for accreditation report writing and student service program reviews | Accreditation, Annual Program Review (student services only) | Staff, Administrators |
| Anthology Baseline | Data collection tool; used to collect and share survey data | General Assessment, Accreditation | Faculty, Staff, Department Chairs, Administrators |
| Anthology Insight | Data visualization tool; used to analyze data from other Anthology tools and Banner | General Assessment, Accreditation | Department Chairs, Administrators |